
<hide>

[[responds to::2006-Rachlin]]

[[keyname::2006-07-16-Davison]]

[[Title::Behavior-centric versus reinforcer-centric descriptions of behavior]]

[[author::Michael Davison]]

[[author/affil::The University of Auckland, New Zealand]]

[[cite/author::Davison 2006]]

[[Date::2006-07-16]]

[[author/ref::Davison]]

[[listing section::behavioral economics]]

[[lead-in::The paper is a brilliant tour-de-force, but a subtext to the paper is what I will call the behavior-centric view. In this view, stimuli are remembered until a response is emitted, and reinforcers reach back in time to effect this response in the presence of the remembered stimulus...]]

</hide>{{page/spec/response}}

==Full Text==

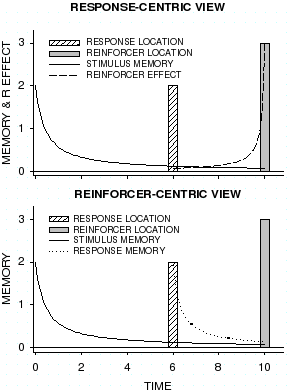

In a recent paper, Rachlin (2006) described how a discounting view pervades delay of reinforcement, memory, and choice. The paper is a brilliant tour-de-force, but a subtext to the paper is what I will call the behavior-centric view. In this view, stimuli are remembered until a response is emitted, and reinforcers reach back in time to effect this response in the presence of the remembered stimulus (see Figure 1, upper graph). I have no quibbles with this approach as a way of conceptualizing behavior. But as a model of how an animal ''adapts'' to, or ''learns'' about, situations with stimulus-behavior delays and response-reinforcer delays, the model has the problem of reinforcer effects spreading backward in time. Physiologically, the process cannot act in this way: physiology requires that the memory of an event flows forward in time, rather than that the reinforcer effect flows backward. Yet this behavior-centric (response-centric) view is the dominant one in the study of delayed reinforcers (Mazur, 1987) and of self-control (Green & Myerson, 2004).

A simpler, much more likely, and physiologically consistent conceptualization of the adaptation to these delays is shown in Figure 1 (lower graph). This is the reinforcer-centric view. In this view, at the point at which a reinforcer is delivered, it is the conjunction of the memories of both the stimulus and the response at the time of reinforcer delivery that is “strengthened” and, I presume, remembered and subsequently accessed and used. This approach suggests a different, and more parsimonious, mechanism for learning and activity that is squarely based on memory. When reinforcers are delayed, it is the residual memory of the response times the value of the reinforcer that will describe the effects of reinforcer delay on behavior. When responses are delayed following stimuli, it is the residual memory of the stimulus times the value of the reinforcer that will describe the stimulus-reinforcer conjunction, providing a role for stimulus-reinforcer relations (as in momentum theory). It seems reasonable to assume that stimulus-behavior-reinforcer correlations are learned at the point of reinforcer delivery, and further that, provided the stimuli, behaviors, and reinforcers are discriminable from other learned correlations, the presentation or emission of any of these engenders the learned correlation. Thus, behavior can be reinstated by presenting the reinforcer or the stimulus to the animal, and maybe by leading an animal to emit the response.
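The two products described in this paragraph can be written compactly. This is only a sketch in notation introduced here for illustration, not the author's own: <math>t_R</math> and <math>t_S</math> are the times elapsed since the response and the stimulus when the reinforcer is delivered, <math>m_R</math> and <math>m_S</math> are the corresponding residual-memory functions (assumed to decrease with elapsed time), and <math>V</math> is the value of the reinforcer. No particular form for the memory functions is implied by the text.

```latex
\[
E_R = m_R(t_R)\,V, \qquad E_S = m_S(t_S)\,V,
\qquad 0 \le m_R, m_S \le 1, \quad m_R(0) = m_S(0) = 1
\]
```

On this reading, <math>E_R</math> describes the effect of reinforcer delay on behavior and <math>E_S</math> describes the stimulus-reinforcer conjunction.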

The reinforcer-centric view highlights important aspects of behavior that have been largely forgotten. For instance, while we generally accept that the discriminability of a stimulus from other stimuli will affect memory for that stimulus, the discriminability of a response from other responses will also have an effect (for a treatment of this, see Davison & Nevin, 1999; and Nevin, Davison, & Shahan, 2005). Equally, the discriminability of this reinforcer from others will be critical, a possibility entertained by Davison and Nevin (1999) as the basis of the differential-outcomes effect. The mechanistic aspect of this conception leads, after training, to a correlation between events extended in time that describes steady-state behavior. But the molecular, mechanistic aspect remains (and must do so) so that animals can learn to change their behavior in changed circumstances (see, for example, Krägeloh & Davison, 2003); that is, the extended correlations must be able to change. The extended correlations predict steady-state behavior; the molecular aspects describe changes between steady states. The molecular aspect is not absent in the steady state; rather, its significance is attenuated by learned correlations.

[[Image:DavisonRachlin2006 fig 1.png|left|frame|413px|'''Figure 1'''. Two views of behavior. The upper graph shows the response-centric view, in which reinforcer effects spread backwards in time. The lower graph shows the reinforcer-centric view in which the memories of both stimuli and responses are correlated with the reinforcer value at the time of reinforcer delivery. <u>R Effect</u> is Reinforcer Effect.]]

The situation is, of course, much more dynamic than depicted in Figure 1. Over time, many differing responses are emitted in many differing stimulus conditions, only some of which come to have a positive correlation with reinforcer (or punisher) delivery as learning develops. More highly discriminable responses and stimuli will, on this view, develop initially greater positive correlations.

Interestingly, the reinforcer-centric view changes nothing about the quantitative analysis discussed by Rachlin (2006). In particular, “self-control” and preference reversal over time still occur.
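A small numerical sketch may make this point concrete. It assumes, purely for illustration, that the residual memory of a response decays hyperbolically with the time elapsed before reinforcer delivery, borrowing the form familiar from the delay-discounting literature (Mazur, 1987). The function names, the decay parameter <code>k</code>, and the reinforcer amounts and delays are all hypothetical and are not taken from Rachlin (2006) or from the text above.

```python
def residual_memory(elapsed, k=1.0):
    """Assumed hyperbolic decay of the memory of a response.

    elapsed: time between the response and reinforcer delivery.
    k: hypothetical decay-rate parameter.
    """
    return 1.0 / (1.0 + k * elapsed)


def strengthening(value, elapsed, k=1.0):
    """Residual memory of the response times the value of the reinforcer."""
    return residual_memory(elapsed, k) * value


def preferred(time_to_small, small=1.0, large=3.0, gap=5.0, k=1.0):
    """Which alternative has the larger memory-times-value product.

    The larger reinforcer always arrives `gap` time units after the smaller one.
    """
    smaller_sooner = strengthening(small, time_to_small, k)
    larger_later = strengthening(large, time_to_small + gap, k)
    return "smaller-sooner" if smaller_sooner > larger_later else "larger-later"


if __name__ == "__main__":
    # Choice made close to the smaller reinforcer, then far in advance of both.
    for t in (0.5, 10.0):
        print(t, preferred(t))
```

With these assumed values the product favors the smaller, sooner reinforcer when the choice is made shortly before it and the larger, later reinforcer when both are remote, which is the preference reversal that a discounting account also predicts. The arithmetic is the same as in discounting; only the interpretation (memory flowing forward rather than reinforcer effects flowing backward) differs.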

There has been considerable debate recently as to whether the behavior-environment relation is better understood as a molecular, mechanistic process or as a process extended over time (Dinsmoor, 2001, and commentaries; Baum, 2002). While the present approach does not argue that the “termination of stimuli positively correlated with shock and the production of stimuli negatively correlated with shock” (Dinsmoor, 2001, p. 311) are positively reinforcing, it does make the suggestion, similar at face value, that memories for stimuli and responses are available at reinforcement or punishment. The present approach bridges temporal distance by memory: it is the degraded memory that is temporally contiguous with the reinforcer or punisher, and it is the resultant size of the relative, and necessarily temporally extended, correlation between these degraded memories that affects subsequent performance. This, I suggest, is what occurs during learning and adaptation to a new environment. But because the correlations are based on extended relations over time, once adaptation has substantially occurred it is these molar correlations that mostly (but not entirely) control behavior. Which approach, molecular or extended, is best depends very much on whether we are interested in describing behavior during learning and adaptation or in describing steady-state behavior. In this I find myself in very substantial agreement with Hineline (2001), who argued that molecular and extended (molar) analyses are not mutually exclusive.

Perhaps we should cease telling our students that “reinforcers increase the probability of responses that they follow” and tell them instead that “response probability is increased when responses are followed by reinforcers”.

Michael Davison

The University of Auckland, New Zealand

===References===

* Baum, W. M. (2002). From molecular to molar: A paradigm shift in behavior analysis. ''Journal of the Experimental Analysis of Behavior, 78'', 95-116.

* Davison, M., & Nevin, J. A. (1999). Stimuli, reinforcers, and behavior: An integration. Monograph. ''Journal of the Experimental Analysis of Behavior, 71'', 439-482.

* Dinsmoor, J. A. (2001). Stimuli inevitably generated by behavior that avoids electric shock are inherently reinforcing. ''Journal of the Experimental Analysis of Behavior, 75'', 311-333.

* Green, L., & Myerson, J. (2004). A discounting framework for choice with delayed and probabilistic rewards. ''Psychological Bulletin, 130'', 769-792.

* Hineline, P. N. (2001). Beyond the molar-molecular distinction: We need multiscaled analyses. ''Journal of the Experimental Analysis of Behavior, 75'', 342-347.

* Krägeloh, C. U., & Davison, M. (2003). Concurrent-schedule performance in transition: Changeover delays and signaled reinforcer ratios. ''Journal of the Experimental Analysis of Behavior, 79'', 87-109.

* Mazur, J. E. (1987). An adjusting procedure for studying delayed reinforcement. In M. L. Commons, J. E. Mazur, J. A. Nevin, & H. Rachlin (Eds.), ''Quantitative analyses of behaviour, Vol. 5: The effects of delay and of intervening events on reinforcement value'' (pp. 55-73). Mahwah, NJ: Erlbaum.

* Nevin, J. A., Davison, M., & Shahan, T. A. (2005). A theory of attending and reinforcement in conditional discriminations. ''Journal of the Experimental Analysis of Behavior, 84'', 281-303.

* Rachlin, H. (2006). [[Notes on discounting]]. ''Journal of the Experimental Analysis of Behavior, 85'', 425-435.

===Acknowledgements===

Thanks to William M. Baum, Douglas Elliffe, and J. Anthony Nevin for many productive conversations on these topics. The views expressed, though, remain my own and probably will continue so to be.

